You can ingest as many documents as you want, and they will all accumulate in the local embeddings database. If you want to start from an empty database, delete the db folder.

Note: during the ingest process no data leaves your local environment. You can ingest without an internet connection, except the first time you run the ingest script, when the embeddings model is downloaded.

Ask questions to your documents, locally!

To ask a question, run a command like python privateGPT.py and wait for the prompt. You'll need to wait 20-30 seconds (depending on your machine) while the LLM consumes the prompt and prepares the answer. Once done, it prints the answer and the 4 sources it used as context from your documents. You can then ask another question without re-running the script; just wait for the prompt again.

Note: you could turn off your internet connection, and the script inference would still work. No data leaves your local environment.

The script also supports optional command-line arguments that modify its behavior. You can see the full list of these arguments by running python privateGPT.py --help in your terminal.

By selecting the right local models and using LangChain, you can run the entire pipeline locally, without any data leaving your environment, and with reasonable performance. ingest.py uses LangChain tools to parse the document and create embeddings locally using HuggingFaceEmbeddings (SentenceTransformers). It then stores the result in a local vector database using the Chroma vector store.
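To make the idea of answering from a local embeddings database more concrete, here is a minimal, library-free sketch of picking the closest source chunks for a question. It is a conceptual illustration only, not the code privateGPT or Chroma actually runs: the function names, the toy 3-dimensional vectors, and the use of cosine similarity are all assumptions made for the example. The default of 4 results mirrors the 4 sources mentioned in the text.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_chunks(query_vec, store, k=4):
    """Return the texts of the k stored chunks most similar to the query vector.

    `store` is a list of (chunk_text, embedding_vector) pairs; a hypothetical
    stand-in for a real vector database.
    """
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in store]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


# Toy "embeddings"; a real embeddings model produces hundreds of dimensions.
toy_store = [
    ("chunk about the economy", [0.9, 0.1, 0.0]),
    ("chunk about healthcare", [0.1, 0.9, 0.0]),
    ("chunk about energy", [0.0, 0.2, 0.9]),
]

if __name__ == "__main__":
    question_vec = [1.0, 0.0, 0.0]  # pretend embedding of the user's question
    for text in top_k_chunks(question_vec, toy_store, k=2):
        print(text)
```

In a real setup the question is embedded with the same model used at ingest time, and the vector store performs the nearest-neighbour lookup; the selected chunks are then passed to the LLM as context.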

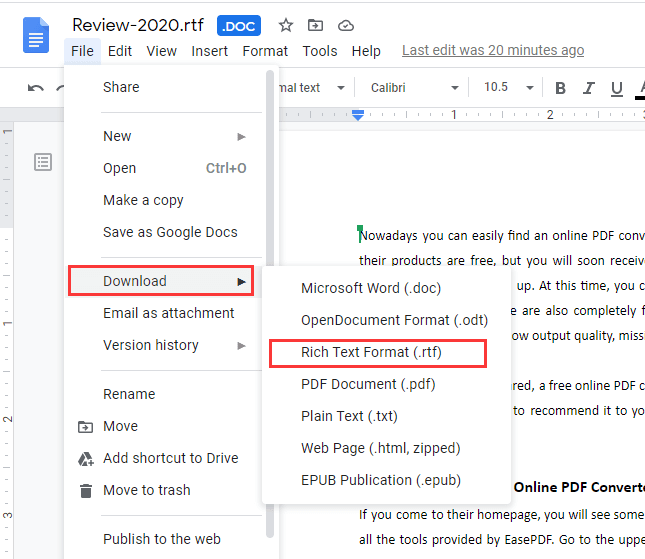

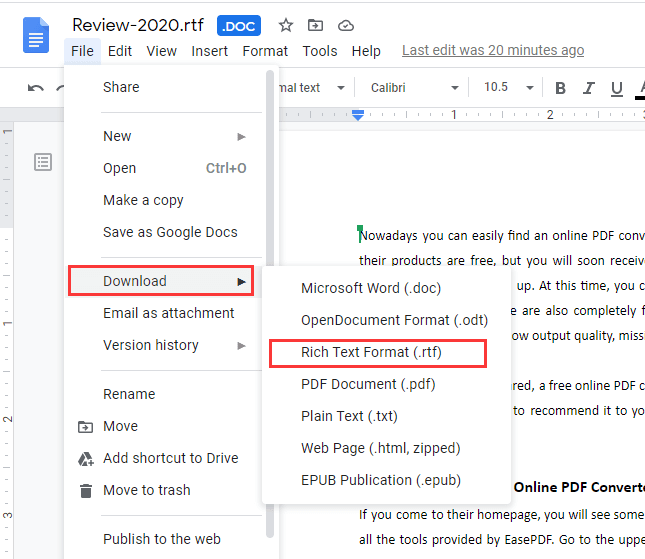

- PERSIST_DIRECTORY: the folder you want your vectorstore in
- MODEL_PATH: path to your GPT4All or LlamaCpp supported LLM
- MODEL_N_CTX: maximum token limit for the LLM
- MODEL_N_BATCH: number of tokens in the prompt that are fed into the model at a time. The optimal value differs a lot depending on the model (8 works well for GPT4All, and 1024 is better for LlamaCpp)
- EMBEDDINGS_MODEL_NAME: SentenceTransformers embeddings model name
- TARGET_SOURCE_CHUNKS: the number of chunks (sources) that will be used to answer a question

Note: because of the way LangChain loads the SentenceTransformers embeddings, the first time you run the script it will require an internet connection to download the embeddings model itself.

This repo uses a state of the union transcript as an example.

Instructions for ingesting your own dataset

Put any and all your files into the source_documents directory, then run python ingest.py to ingest all the data. The output should include lines such as "Loaded 1 new documents from source_documents", "Using embedded DuckDB with persistence: data will be stored in: db", and "Ingestion complete! You can now run privateGPT.py to query your documents".

Ingestion creates a db folder containing the local vectorstore. It takes 20-30 seconds per document, depending on the size of the document.
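As a rough illustration of how the environment variables described above might be consumed, the sketch below reads them into a dictionary using only the standard library. This is an assumption-laden example, not the project's actual loading code: `load_settings` and `_optional_int` are hypothetical names, the fallback of `db` for PERSIST_DIRECTORY and 4 for TARGET_SOURCE_CHUNKS are taken from the values mentioned in the text, and every other variable is simply left as `None` when unset.

```python
import os


def _optional_int(value):
    """Convert a string to int, or return None when the variable is unset or empty."""
    return int(value) if value not in (None, "") else None


def load_settings(env=None):
    """Collect the documented variables from a mapping (defaults to os.environ).

    Fallback values are illustrative assumptions, not confirmed project defaults.
    """
    env = os.environ if env is None else env
    return {
        "persist_directory": env.get("PERSIST_DIRECTORY", "db"),
        "model_path": env.get("MODEL_PATH"),
        "model_n_ctx": _optional_int(env.get("MODEL_N_CTX")),
        "model_n_batch": _optional_int(env.get("MODEL_N_BATCH")),
        "embeddings_model_name": env.get("EMBEDDINGS_MODEL_NAME"),
        "target_source_chunks": int(env.get("TARGET_SOURCE_CHUNKS", "4")),
    }


if __name__ == "__main__":
    # Example with explicit values; the model path is a made-up placeholder.
    example_env = {"MODEL_PATH": "models/example-model.bin", "MODEL_N_BATCH": "8"}
    for key, value in load_settings(example_env).items():
        print(f"{key} = {value}")
```

Passing a plain dictionary instead of relying on `os.environ` keeps the function easy to try out without touching your shell configuration.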
